Clear real-time voice has become something people expect, whether joining an online class, coordinating in a game, or chatting casually with friends. When voices arrive late or break apart, conversations quickly feel tiring and unnatural. Many users simply describe the problem as “lag,” but the real causes are spread across networks, servers, software, and devices. Understanding these technical layers helps explain why some voice chat rooms feel effortless while others struggle. Small design decisions at each stage can add up to either a smooth experience or constant interruptions. This article explains the technical reasons behind voice chat latency and the practical strategies for reducing it.

1. Network Architecture & Infrastructure

Server Geographic Distribution & Edge Networks

Distance between users and servers sets a floor on how fast audio can travel. Light in optical fiber covers roughly 200 km per millisecond, so when a speaker’s voice must cross continents before reaching a processing center, tens of milliseconds of delay are unavoidable before any processing begins. Distributed edge networks reduce this problem by placing entry points closer to users around the world. Audio data can then take shorter routes, lowering round-trip transmission time. This approach is similar to using local service centers instead of sending every request to a single headquarters. The more evenly servers are spread, the more consistent voice performance becomes globally. Good geographic distribution lays the groundwork for low-latency communication.
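To make the distance effect concrete, here is a minimal sketch that estimates the best-case round-trip time between two locations. It assumes a straight great-circle fiber path and a propagation speed of about 200 km per millisecond; real routes are longer and add queuing and processing time, so the result is a lower bound only. The function names are illustrative.

```python
import math

# Assumption: light in optical fiber covers roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points on Earth, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km):
    """Best-case round trip over fiber: out and back, propagation only."""
    return 2 * distance_km / FIBER_KM_PER_MS
```

Under these assumptions a 10,000 km path has a floor of about 100 ms round trip, while a user 500 km from an edge node has a floor of about 5 ms.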

Packet Routing Efficiency and ISP Peering

Even with nearby servers, audio packets still depend on the efficiency of internet routing paths. Data does not always travel in straight lines, and poor routing agreements between networks can add unnecessary detours. Internet Service Provider peering arrangements influence how directly traffic flows between regions. When routing is optimized, packets encounter fewer handoffs and less congestion. Fewer network hops generally mean lower delay and reduced packet loss. Monitoring real-world routing paths helps identify bottlenecks that affect voice quality. Strong infrastructure partnerships quietly improve conversations without users ever noticing the complexity behind the scenes.
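One simple way to act on routing measurements is to probe several entry points and connect to the one with the best observed round-trip time. The sketch below assumes the RTT samples have already been collected by some probing mechanism; the edge names and the `pick_edge` helper are hypothetical. It uses the median so that a single congested probe does not skew the choice.

```python
from statistics import median


def pick_edge(rtt_samples_ms):
    """Return the edge with the lowest median RTT.

    rtt_samples_ms maps an edge name to a list of measured RTTs in ms.
    Edges with no samples are ignored.
    """
    medians = {name: median(samples)
               for name, samples in rtt_samples_ms.items() if samples}
    if not medians:
        raise ValueError("no RTT samples available")
    return min(medians, key=medians.get)


# Hypothetical measurements: edge-a is usually faster despite one outlier.
samples = {
    "edge-a": [30, 32, 200],
    "edge-b": [45, 44, 46],
    "edge-c": [],
}
best = pick_edge(samples)
```

A nearby edge that loses this comparison to a more distant one is often a sign of an indirect route or poor peering on that path.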

2. Audio Processing & Protocols

Codec Choice: Opus vs. Legacy Codecs

Audio codecs determine how voice is compressed and transmitted across networks. Modern codecs such as Opus are designed for real-time communication: Opus supports frame sizes from 2.5 ms to 60 ms and bitrates from about 6 kbit/s to 510 kbit/s, and it can adapt to changing bandwidth conditions. Older codecs often need more data for comparable quality (G.711, for example, runs at a fixed 64 kbit/s) or handle packet loss poorly, which leads to robotic or delayed audio. Efficient compression keeps packets small, so they take less time to send over a constrained link, while preserving clarity. Adaptive codecs can also lower bitrate automatically when connections weaken, keeping speech understandable. Choosing the right codec is one of the simplest ways to improve perceived responsiveness.
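The trade-off between frame size and bandwidth can be worked out with simple arithmetic. This sketch assumes one codec frame per packet and the minimum IPv4 (20 bytes), UDP (8 bytes), and RTP (12 bytes) headers, with no SRTP tag or header extensions; the bitrates are example values, not recommendations.

```python
# Assumption: minimum IPv4 + UDP + RTP headers, one codec frame per packet.
HEADER_BYTES = 20 + 8 + 12


def payload_bytes(codec_bitrate_bps, frame_ms):
    """Average codec payload size per packet, in bytes."""
    return codec_bitrate_bps * frame_ms / 1000 / 8


def wire_bitrate_bps(codec_bitrate_bps, frame_ms):
    """Codec bitrate plus per-packet header overhead, in bits per second."""
    packets_per_second = 1000 / frame_ms
    return codec_bitrate_bps + packets_per_second * HEADER_BYTES * 8


# 24 kbit/s voice in 20 ms frames: 60-byte payloads, 50 packets per second.
payload_20ms = payload_bytes(24_000, 20)
wire_20ms = wire_bitrate_bps(24_000, 20)
wire_60ms = wire_bitrate_bps(24_000, 60)
```

With these numbers, 20 ms frames cost 40 kbit/s on the wire, while 60 ms frames cost about 29.3 kbit/s but add 40 ms of packetization delay. Shorter frames lower latency at the price of more header overhead.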

Real-Time Transport Protocol (RTP/WebRTC) Implementation

Protocols define how audio data is packaged and delivered between devices. Real-time systems favor timeliness over perfect delivery, since a late audio packet is worse than a minor loss in quality. WebRTC carries media as RTP (secured as SRTP), typically over UDP, and RTP sequence numbers and timestamps let the receiver detect loss, reorder packets, and measure jitter. Fine-tuning buffer sizes and retransmission rules prevents unnecessary waiting. Poorly configured protocols can introduce hidden delays that stack up over time. Careful implementation ensures that audio flows continuously even when networks are unstable. Protocol efficiency is a key factor separating smooth calls from frustrating ones.
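To show what a receiver has to work with, the following sketch parses the 12-byte fixed RTP header defined in RFC 3550. It ignores CSRC entries and header extensions, and the sample packet is fabricated for illustration (payload type 111 is only an example of a dynamic payload type).

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    version: int
    marker: bool
    payload_type: int
    sequence: int    # 16-bit, used to detect loss and reordering
    timestamp: int   # 32-bit, in units of the media clock
    ssrc: int        # identifies the sending stream


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Parse the fixed RTP header; CSRCs and extensions are not decoded."""
    if len(packet) < 12:
        raise ValueError("RTP packet shorter than the 12-byte fixed header")
    byte0, byte1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    return RtpHeader(
        version=byte0 >> 6,
        marker=bool(byte1 & 0x80),
        payload_type=byte1 & 0x7F,
        sequence=seq,
        timestamp=ts,
        ssrc=ssrc,
    )


# Fabricated example packet: version 2, PT 111, seq 1000, timestamp 960.
example = bytes([0x80, 0x6F, 0x03, 0xE8, 0x00, 0x00, 0x03, 0xC0,
                 0xDE, 0xAD, 0xBE, 0xEF]) + b"\x00" * 10
header = parse_rtp_header(example)
```

Gaps in `sequence` reveal lost packets, and comparing `timestamp` deltas with arrival-time deltas is the basis for jitter measurement.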

3. Backend Server Performance

Media Server Load Balancing and Scaling

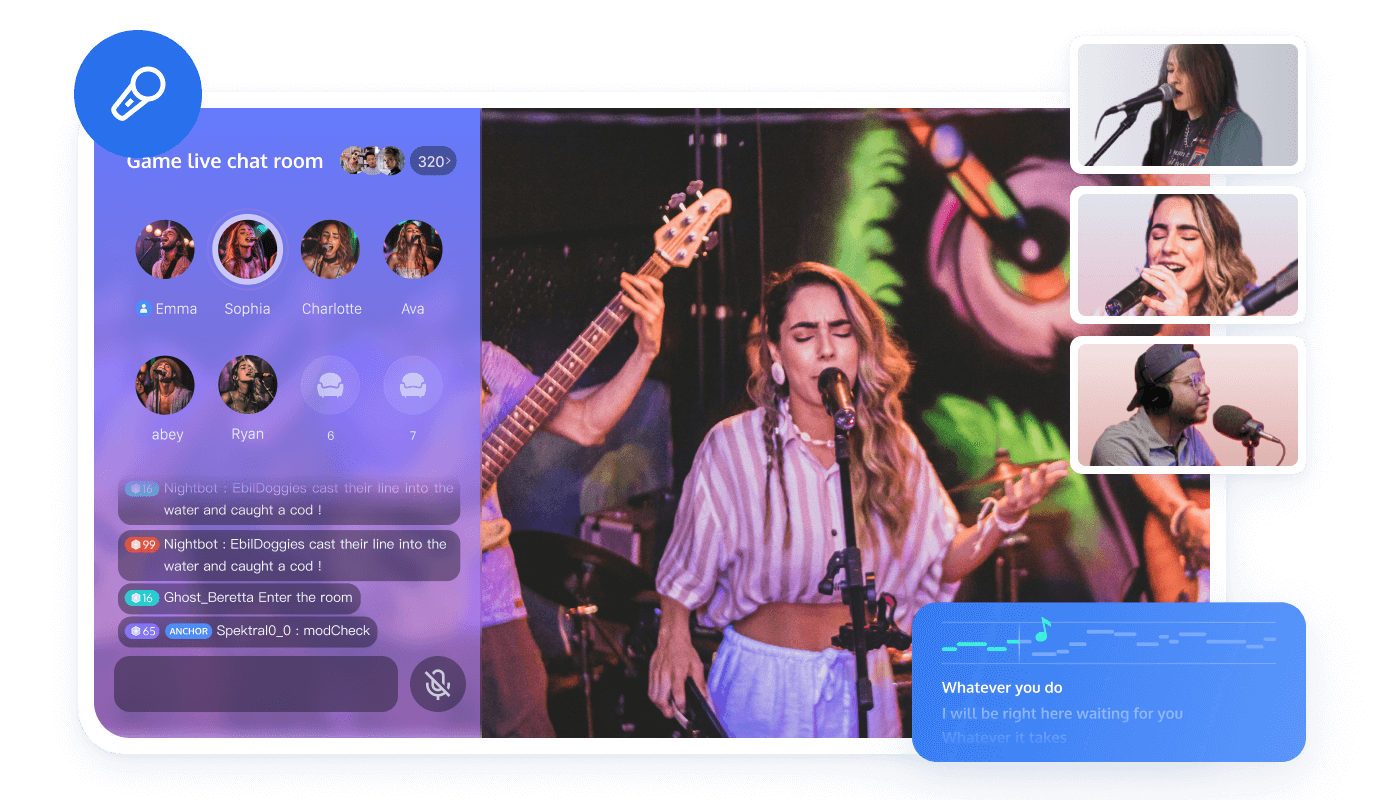

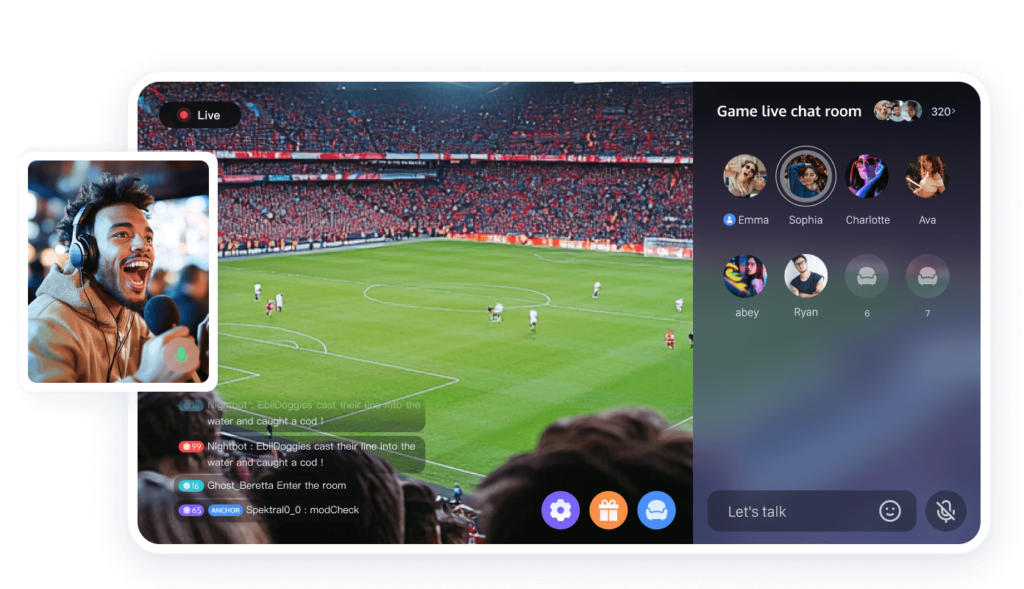

Media servers mix, forward, or route audio streams between participants. When too many users connect to the same server, processing queues grow and delay increases. Load balancing spreads sessions across multiple servers to prevent overload. Automatic scaling adds computing resources during busy periods, such as live events or peak evening hours, so that performance stays stable as user numbers fluctuate. For teams looking to implement such capabilities efficiently, integrated solutions like Tencent’s RTC Voice Chat Room offer a ready-to-deploy option. Such solutions combine global coverage with low-latency media handling and built-in room management, which reduces deployment complexity without sacrificing responsiveness.
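A basic version of this logic places each new session on the least-loaded server and flags when the whole pool is running hot. The sketch below is illustrative only: the `MediaServer` fields, the 80% threshold, and the capacity figures are assumptions, and production balancers also weigh region, health checks, and room affinity.

```python
from dataclasses import dataclass


@dataclass
class MediaServer:
    name: str
    active_streams: int
    capacity: int  # assumed to be a positive stream limit

    @property
    def load(self) -> float:
        return self.active_streams / self.capacity


def choose_server(servers, scale_out_threshold=0.8):
    """Return (least-loaded server, whether more capacity should be added).

    If even the least-loaded server is at or above the threshold, the pool
    as a whole is busy and the caller should trigger scaling.
    """
    if not servers:
        raise ValueError("server pool is empty")
    best = min(servers, key=lambda s: s.load)
    return best, best.load >= scale_out_threshold


pool = [MediaServer("media-1", 900, 1000), MediaServer("media-2", 400, 1000)]
target, needs_scale_out = choose_server(pool)
```

Here the new session goes to `media-2`, and no scale-out is requested because that server is at 40% load.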

Optimizing SFU vs. MCU Architectures

Different server architectures handle multi-user audio in different ways. A Selective Forwarding Unit, or SFU, relays individual streams without mixing them, reducing processing delay. A Multipoint Control Unit, or MCU, mixes audio on the server, which can simplify clients but increases server workload. SFUs generally provide lower latency for interactive voice, especially in larger groups. MCUs may still be useful where bandwidth is limited or device capabilities vary widely. Choosing the right architecture depends on expected room size and interaction style. Thoughtful design prevents scaling issues before they appear.
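The difference between the two architectures shows up clearly when counting streams. This rough sketch assumes every one of the n participants sends audio continuously and that the SFU forwards every stream to every other participant; real deployments usually forward only the few active speakers, which lowers the SFU numbers considerably. For the MCU it assumes one mix per listener that excludes the listener's own voice.

```python
def sfu_streams(n):
    """Stream counts for an SFU room of n participants (no speaker selection)."""
    return {
        "client_up": 1,
        "client_down": n - 1,        # each client receives every other stream
        "server_in": n,
        "server_out": n * (n - 1),   # forwarded copies, no decoding or mixing
    }


def mcu_streams(n):
    """Stream counts for an MCU room of n participants."""
    return {
        "client_up": 1,
        "client_down": 1,            # each client receives a single mix
        "server_in": n,
        "server_out": n,
        "server_mixes": n,           # decode, mix, and re-encode per listener
    }


room_of_8_sfu = sfu_streams(8)
room_of_8_mcu = mcu_streams(8)
```

For eight participants the SFU sends 56 forwarded streams and each client downloads 7, while the MCU sends 8 but must decode and re-encode audio, which is where its extra processing delay and server cost come from.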

4. Client-Side Implementation

Efficient Audio Capture and Playback Buffers

User devices play a critical role in voice performance. Microphone capture settings and playback buffer sizes influence how quickly audio is processed. Buffers that are too large add delay, while buffers that are too small may cause stuttering. Efficient audio pipelines minimize processing overhead without sacrificing stability. Hardware differences between phones, tablets, and computers also affect performance. Careful client optimization ensures consistent experiences across devices. Smooth local processing complements strong network design.
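The delay a buffer adds follows directly from its size and the sample rate. This sketch shows the arithmetic; the buffer sizes are example values, and actual device pipelines add further latency in drivers and hardware that is not modeled here.

```python
def buffer_latency_ms(frames, sample_rate_hz):
    """Time needed to fill (or drain) a buffer of the given size."""
    return frames * 1000 / sample_rate_hz


def pipeline_latency_ms(buffer_sizes, sample_rate_hz):
    """Sum of the delays added by each buffer in a capture or playback chain."""
    return sum(buffer_latency_ms(f, sample_rate_hz) for f in buffer_sizes)


small = buffer_latency_ms(480, 48_000)     # 10 ms
large = buffer_latency_ms(4096, 48_000)    # about 85 ms
chain = pipeline_latency_ms([480, 480, 960], 48_000)
```

A 480-frame buffer at 48 kHz holds 10 ms of audio, so a chain of three modest buffers (480, 480, and 960 frames) already adds 40 ms before the network is involved.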

Network Jitter Buffer Algorithms

Network conditions fluctuate constantly, causing packets to arrive at uneven intervals. Jitter buffers temporarily store incoming audio to smooth out these variations. Smart algorithms adjust buffer size dynamically based on current network behavior. If the buffer is kept too large, it introduces noticeable delay. If it is kept too small, audio may become choppy during instability because late packets miss their playout time. Adaptive jitter management balances continuity with responsiveness. This invisible process plays a major role in how natural a conversation feels.
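A minimal sketch of the adaptive idea uses the interarrival jitter estimate from RFC 3550, which smooths the change in packet transit time with a gain of 1/16. The mapping from jitter to target delay (a multiplier of 3, clamped between 20 ms and 200 ms) is an assumption chosen for illustration; production jitter buffers use more elaborate statistical models.

```python
class JitterEstimator:
    """Running interarrival jitter, following the RFC 3550 formula."""

    def __init__(self):
        self.jitter_ms = 0.0
        self._prev_transit = None

    def update(self, send_ms, recv_ms):
        """Feed one packet's send and receive times; returns current jitter."""
        transit = recv_ms - send_ms
        if self._prev_transit is not None:
            d = abs(transit - self._prev_transit)
            self.jitter_ms += (d - self.jitter_ms) / 16
        self._prev_transit = transit
        return self.jitter_ms


def target_delay_ms(jitter_ms, min_ms=20.0, max_ms=200.0, multiplier=3.0):
    """Assumed policy: buffer a few multiples of jitter, within fixed bounds."""
    return max(min_ms, min(max_ms, multiplier * jitter_ms))


est = JitterEstimator()
est.update(0, 50)      # first packet: nothing to compare against yet
est.update(20, 70)     # same transit time, jitter stays at 0
j = est.update(40, 106)  # transit grew by 16 ms, jitter becomes 1.0
```

Because the estimate moves by only one sixteenth of each new deviation, a single late packet barely changes the target delay, while sustained instability raises it steadily.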

5. Diagnostics & Optimization Tools

Effective optimization depends on accurate measurement. Real-time analytics can track latency, packet loss, and jitter across regions and device types. Visual dashboards make it easier to detect patterns such as peak-hour slowdowns. Alerts allow technical teams to respond quickly before users are affected. Long-term data supports better infrastructure planning and codec tuning. Continuous monitoring turns performance improvement into an ongoing process rather than a one-time task.
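As an illustration of the kind of metrics a dashboard might compute, this sketch derives a latency percentile and a packet loss ratio from raw samples. It is a simplified example: the loss calculation ignores 16-bit sequence number wraparound, and the sample values are made up.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def loss_ratio(received_seqs):
    """Fraction of packets missing between the lowest and highest sequence seen.

    Simplification: ignores 16-bit sequence number wraparound.
    """
    unique = set(received_seqs)
    if not unique:
        raise ValueError("no packets received")
    expected = max(unique) - min(unique) + 1
    return (expected - len(unique)) / expected


latencies_ms = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
loss = loss_ratio([1, 2, 4, 5])
```

Tracking a high percentile such as p95 alongside the median is useful because averages hide the occasional bad experiences that users notice most.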

Conclusion

Voice chat quality depends on a chain of technical factors working together smoothly. Network distance, routing efficiency, codec selection, server architecture, and device optimization all influence latency. Weakness in any layer can turn a simple conversation into a frustrating experience. Careful engineering and ongoing monitoring help maintain natural, real-time interaction even as usage grows. When each component is tuned correctly, digital voice communication can feel almost as immediate as speaking face to face.